This article is produced and financed by the University of Oslo.

Scientists call for reform on rankings and indicators of science journals

Researchers are used to being evaluated based on indicators like the impact factors of the scientific journals in which they publish papers, and their number of citations. A team of 14 natural scientists from nine countries is now rebelling against this practice, arguing that obsessive use of indices is damaging the quality of science.

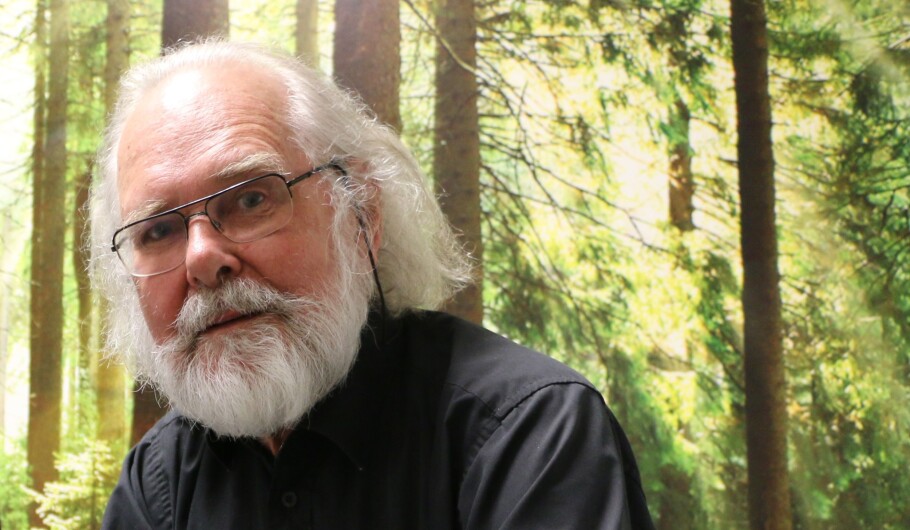

“Our message is quite clear: Academics should stop worrying too much about indices. Instead, we should work more on the scholarship and the quality of research”, says Professor Colin Chapman from the Department of Anthropology at the George Washington University in Washington.

“The exaggerated reliance on indices is taking attention away from the quality of the science. The system works just fine for experienced researchers like Colin Chapman and myself, but younger researchers and their careers are suffering because of the way indices are used today”, adds Professor Nils Chr. Stenseth at the University of Oslo.

Indices don’t measure quality

Chapman and Stenseth are two of the authors behind an attention-grabbing paper in the scientific journal Proceedings of the Royal Society B, about the “games academics play” and the consequences for the future of academia. One of the problems with indices is that people can play games with them to better their scores, according to the paper.

The basis for the rebellion is that research is a highly competitive profession where evaluation plays a central role. When a team of researchers – researchers don’t usually work alone these days – have made a new discovery, they write a detailed academic article about the method and the results. The next step is to send the article to a scientific journal, which may accept or reject the paper.

The editors of the journal usually send the article to a small group of independent scientific experts. Based on their reviews, the editors decide if the paper should be accepted or rejected. The reviewers and the editors may also suggest revisions before the paper is accepted.

When the scientific paper finally is published, other researchers in related fields may start citing the paper in their own works. A high number of citations is often perceived as a measure of scientific quality.

The process is not just fine

Viewed from the outside, this process seems just fine. But during the last 10 or 15 years, the process has started to unravel. The quality of science has become less important, according to Chapman, Stenseth, and their co-authors. Instead, attention focuses too much on a small set of easy-to-measure indicators: impact factors and individual h-indexes, in addition to the number of citations.

There are a lot of scientific journals out there, and a handful of them are so prestigious that their names are known to the general public. The journals Nature, Science, and The Lancet are among the most famous. If a young scientist in the natural or medical sciences can publish a paper in one of these journals, his or her career can get a huge boost.

The most prestigious journals also have high impact factors. A journal's impact factor is the average number of citations received in a given year by the papers it published during the preceding two years. An individual researcher’s h-index combines the number of papers with the citations they have received: it is the largest number h such that the researcher has h papers with at least h citations each.
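To make the two indices concrete, here is a minimal Python sketch. It assumes the two-year impact factor described above and the standard definition of the h-index; the function names and all the numbers are invented for illustration and are not taken from the paper.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)  # best-cited papers first
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank
        else:
            break
    return h


def impact_factor(citations_received, papers_published):
    """Average citations per paper for a journal's two-year window.

    citations_received: citations this year to papers from the preceding two years
    papers_published:   number of papers the journal published in those two years
    """
    if papers_published <= 0:
        raise ValueError("papers_published must be positive")
    return citations_received / papers_published


# Hypothetical citation counts for two researchers with five papers each.
steady = [10, 8, 5, 4, 3]
one_hit = [25, 8, 5, 3, 3]

print(h_index(steady))          # 4
print(h_index(one_hit))         # 3
print(impact_factor(600, 200))  # 3.0
```

Note that the second researcher has more citations in total (44 against 30) but a lower h-index, which shows how much a single number can hide about the underlying papers.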

“Scores for these indices have great consequences. For example, in some countries and disciplines, publication in journals with impact factors lower than 5.0 is officially considered to have no value”, according to the Proceedings of the Royal Society paper. The authors also point out that it is not uncommon to hear that the only articles that count are the ones in journals with an impact factor that is above an arbitrary value. Or worse, that publishing in lower-tier journals weakens CVs.

Even a flaw can create citations

A general problem is that the indices can be manipulated in ways that are making them next to worthless as a measure of scientific achievement or quality. Professor Stenseth was on an evaluation committee for the European Research Council some 10 years ago, and he is still astonished about the experience.

“We discussed the use of indices and soon split into two groups. At the time, you had to do a lot of manual work in order to calculate the number of citations. Those of us that did not consider the number of citations as very important read the actual articles instead”, recounts Professor Stenseth.

That became a watershed moment for Stenseth, because the citation-skeptical researchers soon discovered that some of the most highly cited papers were commentaries. A commentary doesn’t as a rule contain any new discoveries.

“But the worst thing was that we discovered that some papers were highly cited because there was a flaw in them! A lot of papers identified the error in the paper, but it was still listed as a citation! That’s one of the reasons I insist that a high number of citations is not a proof of excellent scientific quality”, adds Stenseth.

Professor Chapman in Washington, talking to Professor Stenseth in Oslo via Skype, confirms that he has had similar experiences.

“This is the way the system works. Observations like that may seem almost funny, but they are also terrible. And sad,” Chapman comments.

Indices as surrogate measures

The authors of the Proceedings of the Royal Society paper are not alone in their criticism. The San Francisco Declaration on Research Assessment (DORA) originated from a meeting of the American Society for Cell Biology in 2012. It recommends that journal impact factors should not be used as a surrogate measure of the quality of an individual research article, to assess an individual scientist's contribution, or in hiring, promotion or funding decisions. Some 15,000 individuals and 1,550 organizations worldwide have signed the declaration, but change has yet to come.

“Even though there is an extensive body of literature criticizing these indices and their use, they are still being widely used in important and career-determining ways. This needs to stop”, says Colin Chapman.

Science is paramount

Colin Chapman and Nils Chr. Stenseth emphasize that science and scholarship are paramount in a modern society.

“That’s why we got into this business: to discover new things. But what’s happening instead is that we keep getting crushed by the system. The system says that we should not care about improving our science, we should instead care about increasing our indices. That is not an incentive for creating excellent science”, Chapman insists.

The paper contains a list of the possible games academics can play – and may have to play – in order to improve their indices.

“One of the most common games is to write a paper with a long list of authors, like a sort of favour. The idea is that you can be on my paper, even if you didn’t make a real contribution, so maybe I can be on your paper next. You scratch my back, and I’ll scratch yours”, Chapman explains.

Another game is that researchers can agree to cite each other: “I can cite your paper if you cite mine”. Publishers have even organized “citation cartels” in which authors are encouraged to cite articles from other journals in the cartel.

“I sent a paper to a journal of applied ecology some years ago. The paper was accepted, and the editor told us that we should cite four or five more papers, all from that journal. That of course increases the journal's impact factor, because it increases the citations of papers in that journal. This has been the case for many journals and is now considered to be a bad practice”, Stenseth explains.
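The arithmetic behind this kind of editorial request can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the journal sizes and citation counts are invented, the function name is hypothetical, and every added citation is assumed to point to a paper inside the journal's two-year impact factor window.

```python
def impact_factor_before_and_after(base_citations, papers_in_window,
                                   accepted_papers, added_citations_per_paper):
    """Compare a journal's impact factor with and without requested self-citations.

    base_citations:            citations the window's papers would receive anyway
    papers_in_window:          papers the journal published in the preceding two years
    accepted_papers:           newly accepted papers asked to add citations
    added_citations_per_paper: extra citations to the journal added by each of them
    """
    if papers_in_window <= 0:
        raise ValueError("papers_in_window must be positive")
    extra = accepted_papers * added_citations_per_paper
    before = base_citations / papers_in_window
    after = (base_citations + extra) / papers_in_window
    return before, after


# Hypothetical journal: 200 papers in the window, 400 citations without any nudging,
# and 100 newly accepted papers that each add four citations to the journal.
before, after = impact_factor_before_and_after(400, 200, 100, 4)
print(before, after)  # 2.0 4.0
```

In this made-up case the request doubles the journal's impact factor without any change in the quality of the papers it published.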

Supportive reactions

Colin Chapman and Nils Chr. Stenseth have received quite a few supportive reactions to their paper.

“One of my colleagues wrote a blog post criticizing us for writing something that could be discouraging for young scientists. But what we were really trying to do was to make young researchers aware of the fact that this is a flawed system which should be mended”, Chapman counters.

“My opinion is that indices, and the way they are used, take attention away from the science. In some small fields, which by necessity will have a very low index, it is nearly impossible to get very high citations even if the research is excellent and important”, Stenseth says.

“Instead, the attention is about where your paper was published, how many citations it generated, and the impact factor of the journal”, he adds.

The authors also have suggestions about how to improve the system.

“The short answer is that academics should stop looking at indices and get back to reading the papers”, Chapman says. But academics can’t do that alone if research councils and other funding agencies continue to use the indices as a basis for decisions about funding. One simple improvement would be for research councils to stop asking researchers for their number of citations when they submit a research proposal.

“We would like to see changes in the incentive systems, rewarding quality research and guaranteeing transparency. Senior faculty should establish the ethical standards, mentoring practices and institutional evaluation criteria to create the needed changes”, Chapman and Stenseth both insist.

“We hope to spark a discussion that will shape the future of academia and move it in a more positive direction. Scientists represent some of the most creative minds that can address societal needs. It is now time to forge the future we want”, conclude the 14 researchers who wrote the paper in the Proceedings of the Royal Society.

Reference:

C.A. Chapman et al.: “Games academics play and their consequences: how authorship, h-index and journal impact factors are shaping the future of academia”. Proceedings of the Royal Society B. Published 04 December 2019.