THIS ARTICLE/PRESS RELEASE IS PAID FOR AND PRESENTED BY NTNU Norwegian University of Science and Technology

A robotic microplankton sniffer dog

The microscopic, free-floating algae called phytoplankton — and the tiny zooplankton that eat them — are notoriously difficult to count. Researchers need to know how a warming climate will affect them both. A new kind of smart, lightweight autonomous underwater vehicle (LAUV) can help.

Marine phytoplankton, or plant plankton, are incredibly important to life on Earth. As they go about their work of turning sunlight into energy, they produce fully 50 per cent of the oxygen we breathe.

It’s no wonder that researchers want to know what climate change and a warming ocean might do to these tiny floating oxygen factories, especially since they serve as the basis of marine food webs and thus support the production of zooplankton and fish.

But counting and identifying plankton is incredibly hard. It’s like looking for a zillion tiny needles in an enormous haystack, except that both the haystack and the needles are constantly moving through the vast reaches of the ocean.

Now, an interdisciplinary collaboration between NTNU researchers and their colleagues from SINTEF Ocean is developing a smart robotic lightweight autonomous underwater vehicle (LAUV) that’s programmed to find and identify different groups of plankton.

The five-year project, called AILARON, was granted NOK 9.5 million by the Research Council of Norway in 2017. Earlier this spring, researchers took the LAUV out to the rough Norwegian coast on a test drive.

Image, analyse, plan and learn

Researchers from the university’s Departments of Engineering Cybernetics, Marine Technology and Biology are all part of the collaboration.

What’s special here is that the LAUV uses the entire processing chain of imaging, machine learning, hydrodynamics, planning and artificial intelligence to 'image, analyse, plan and learn' as it does its work.

As a result, the robot can even estimate where the floating organisms are headed, so that researchers can collect more information about the plankton as the organisms ride the ocean currents. Think of the LAUV as a robotic version of a real drug sniffer dog, if the dog could both identify drugs in a bag and tell its handlers where the bag was headed.

“What our LAUV does is improve accuracy, reduce measurement uncertainty and accelerate our ability to sample plankton with high resolution, both in space and time,” said Annette Stahl, an associate professor at NTNU’s Department of Engineering Cybernetics who is head of the AILARON project.

Current approaches limited, time-consuming

Sampling phytoplankton using conventional methods is very time consuming and can be expensive.

“Analyses of phytoplankton samples, especially at a high temporal and spatial resolution, can cost quite a lot,” said Nicole Aberle-Malzahn, an associate professor at NTNU’s Department of Biology, who is part of the project.

The upside of the more conventional methods, however, is that they can provide a lot of information, especially when it comes to species composition and biodiversity.

But most of the boat-based or moored samplers just provide snapshots in space or time, or if the information is collected via satellite, a really big picture of what’s going on in the ocean, without much detail.

Enter the robotic LAUV sniffer dog.

Robotic revolution meets artificial intelligence

The robot LAUV that’s being refined by the AILARON research group looks like a small, slender torpedo.

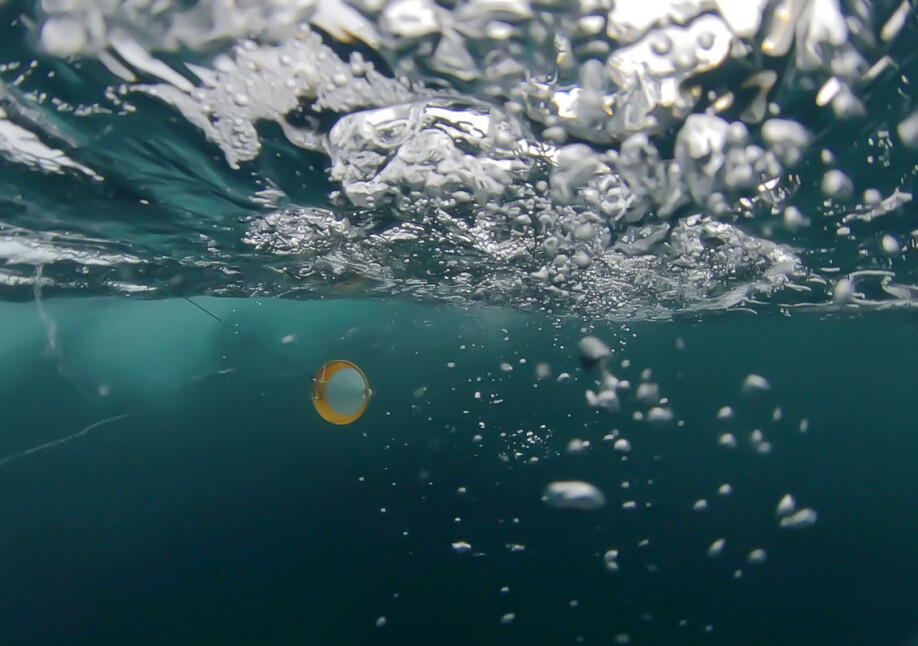

It has a camera that takes images of the plankton in the upper layers of the ocean, in an area called the photic zone, which extends as deep as sunlight can penetrate. It is also equipped with chlorophyll, conductivity, depth, oxygen, salinity and temperature sensors, as well as a hydrodynamic sensor, a Doppler velocity log (DVL).

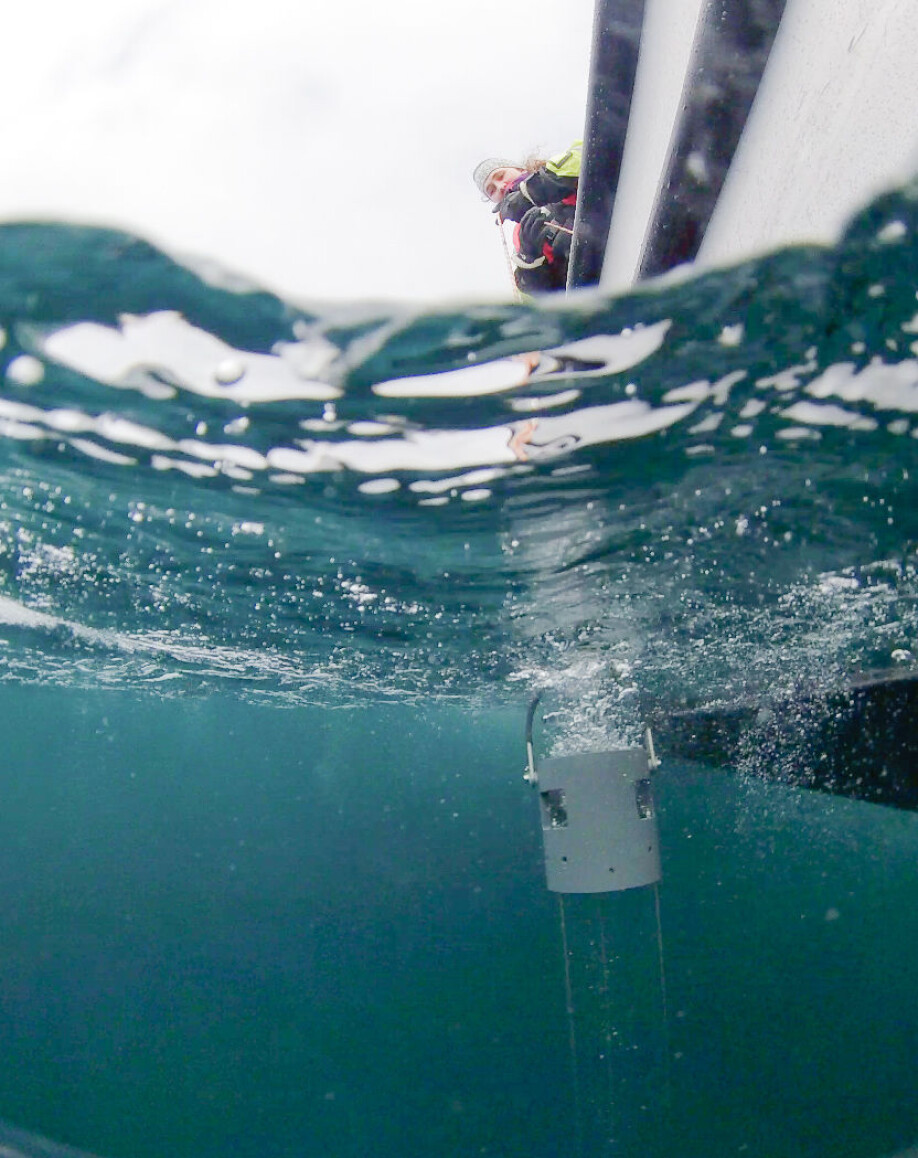

In a recent field effort coordinated by Joseph Garrett, a postdoctoral researcher at NTNU’s Department of Engineering Cybernetics, an interdisciplinary group of scientists gathered at the Mausund Fieldstation, on a tiny island off the mid-Norwegian coast about a three-hour drive from Trondheim.

The aim was to catch the spring bloom event, when the phytoplankton responds to the increased sunlight associated with the spring, and its biomass starts to explode.

The researchers, led by Tor Arne Johansen, a professor at NTNU’s Department of Engineering Cybernetics, used hyperspectral imaging from both drones and small aircraft to provide phytoplankton estimates from above the water surface. They also had satellite images to provide chlorophyll estimates from space. Finally, the LAUV and plankton sampling team sent their devices on track to follow the bloom in time and space.

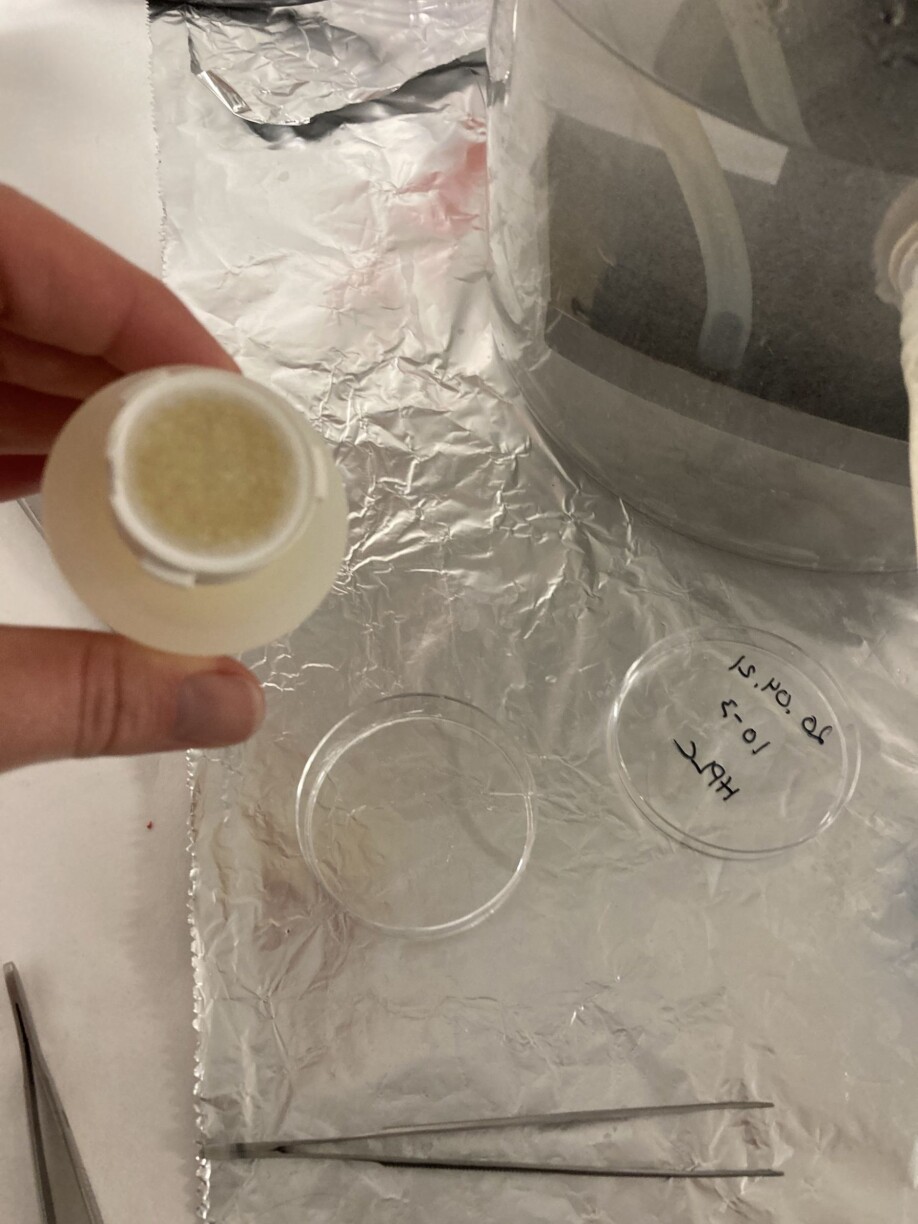

The scientists confirmed that the phytoplankton was 'blooming' by filtering seawater. When the bright white filters turned brown, they knew that the phytoplankton production in the water column was in high gear.

Training the sniffer dog

The LAUV can look at the images and classify them right away, because it has been 'taught' over time to recognize different groups of plankton from the images it takes.

The on-board computer also generates a probability-density map to show the areal extent of the organisms that it has detected.
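To give a feel for what such a map could look like, here is a minimal sketch that bins detection positions into a grid and normalises the counts so they add up to one. The function names `density_map` and `hotspot`, the 10-metre cell size and the example coordinates are all assumptions made for illustration; the article does not describe how the on-board software actually builds its map.

```python
from collections import Counter


def density_map(detections, cell_size_m=10.0):
    """Bin (x, y) detection positions into square cells and normalise.

    Returns a dict mapping (col, row) cell indices to the fraction of all
    detections that fell in that cell. Hypothetical helper, not AILARON code.
    """
    if not detections:
        return {}
    counts = Counter(
        (int(x // cell_size_m), int(y // cell_size_m)) for x, y in detections
    )
    total = sum(counts.values())
    return {cell: n / total for cell, n in counts.items()}


def hotspot(dmap):
    """Return the cell index holding the largest share of detections."""
    return max(dmap, key=dmap.get)


# Made-up detection positions in metres, relative to the survey origin.
detections = [(3.0, 4.0), (5.0, 8.0), (12.0, 3.0), (7.5, 1.0)]
dmap = density_map(detections)
best_cell = hotspot(dmap)
```

A real system would likely smooth the counts and account for how much time the vehicle spent in each cell, but a normalised grid is enough to show how a "hotspot" can be picked out of a set of detections.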

The LAUV can also decide to return to previously detected hotspots that contain species of interest in the area it has surveyed. Here’s where human handlers can play a role, because they can 'talk' to the LAUV if needed.

Researchers can also change the LAUV’s sampling preferences on the fly in response to what it finds, which is why they call it a kind of sniffer dog — it can detect samples of interest and map out a volume where a research ship could come and do follow-on sampling.

The information collected by the sensors when the LAUV is taking its samples can help determine the spread and volume of the targeted creatures before the LAUV goes to the next hotspot.

Can predict where currents are headed

Plankton can’t swim against currents. Instead, they float and are advected by currents. That means researchers need to know what is happening with currents.

The sniffer dog LAUV has equipment that allows it to estimate local currents at different depth layers. It then builds a model that lets it predict where the plankton are headed, which helps the LAUV decide where it should go next.
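The basic idea behind such a prediction can be sketched in a few lines: if plankton drift passively, their future position is roughly their current position plus the current velocity multiplied by the elapsed time. The sketch below assumes a constant current in each depth layer; the function name `predict_position`, the layer labels and the velocities are hypothetical values chosen for illustration, not figures from the AILARON project.

```python
def predict_position(start, current, hours):
    """Advect a passive particle with a constant horizontal current.

    start:   (east_m, north_m) position of a plankton patch
    current: (east_m_per_s, north_m_per_s) estimated current in one layer
    hours:   how far ahead to predict
    """
    seconds = hours * 3600.0
    return (start[0] + current[0] * seconds, start[1] + current[1] * seconds)


# Hypothetical current estimates for two depth layers, in metres per second.
layer_currents = {"0-10 m": (0.10, 0.00), "10-20 m": (0.05, -0.02)}

patch = (0.0, 0.0)
forecast = {
    layer: predict_position(patch, velocity, hours=2.0)
    for layer, velocity in layer_currents.items()
}
```

Because each layer can move at a different speed and in a different direction, a patch that starts in one place may spread out with depth over time, which is one reason layer-by-layer estimates are useful.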

The sampling and processing of the images by the LAUV is iterative, meaning that the sampling is repeated and refined. It’s like training a sniffer dog with thousands of training sessions.

The overall goal is for the LAUV to be able to visit plankton hotspots after it conducts an initial 'fixed lawn mower' survey, which is pretty much what it sounds like: the vehicle sweeps back and forth across the area in parallel lines.
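A lawn mower pattern is simple to write down. The sketch below generates waypoints for a back-and-forth sweep of a rectangular area; the function name `lawn_mower_waypoints`, the rectangle dimensions and the line spacing are assumptions for illustration only, and the article does not say how the LAUV's actual survey tracks are planned.

```python
def lawn_mower_waypoints(width_m, height_m, spacing_m):
    """Return (x, y) waypoints for a back-and-forth survey of a rectangle.

    The vehicle runs along x, steps up by spacing_m in y, then runs back,
    alternating direction on every line.
    """
    if spacing_m <= 0:
        raise ValueError("spacing_m must be positive")
    waypoints = []
    y = 0.0
    left_to_right = True
    while y <= height_m:
        line_start, line_end = (0.0, width_m) if left_to_right else (width_m, 0.0)
        waypoints.append((line_start, y))
        waypoints.append((line_end, y))
        left_to_right = not left_to_right
        y += spacing_m
    return waypoints


# Hypothetical 100 m by 40 m survey box with 20 m between lines.
track = lawn_mower_waypoints(width_m=100.0, height_m=40.0, spacing_m=20.0)
```

The fixed pattern gives even coverage with no prior knowledge of where the plankton are. Once the first sweep has revealed the hotspots, the vehicle can drop the fixed pattern and head for them directly.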

“The goal is for us to be able to understand community structures and dispersion in relation to water column biological processes,” said Stahl. “And the use of the LAUV allows us to collect this information — for example, our LAUV can operate for as long as 48 hours.”

Lots of detail in time and space

Using smart LAUV technologies helps to assess the biological, physical and chemical conditions in a given area with a high temporal and spatial resolution, Stahl said.

“We could never obtain this kind of resolution using traditional plankton sampling approaches,” she said. “Projects such as AILARON can thus help to advance our knowledge on ecosystem status and increase our possibilities for ecosystem surveillance and management under future ocean conditions.”

Geir Johnson, a marine biologist at NTNU’s Department of Biology and a key scientist at the university’s Centre for Autonomous Marine Operations and Systems (AMOS), agrees.

“We want to get an overview of species distribution, biomass and health status as a function of time and space,” he said. “But to do this we need to use instrument-carrying underwater robots.”

Reference:

Aya Saad et al.: Advancing Ocean Observation with an AI-Driven Mobile Robotic Explorer. Oceanography, 2020.
