An article from NordForsk

Finding the subatomic needle in the haystack

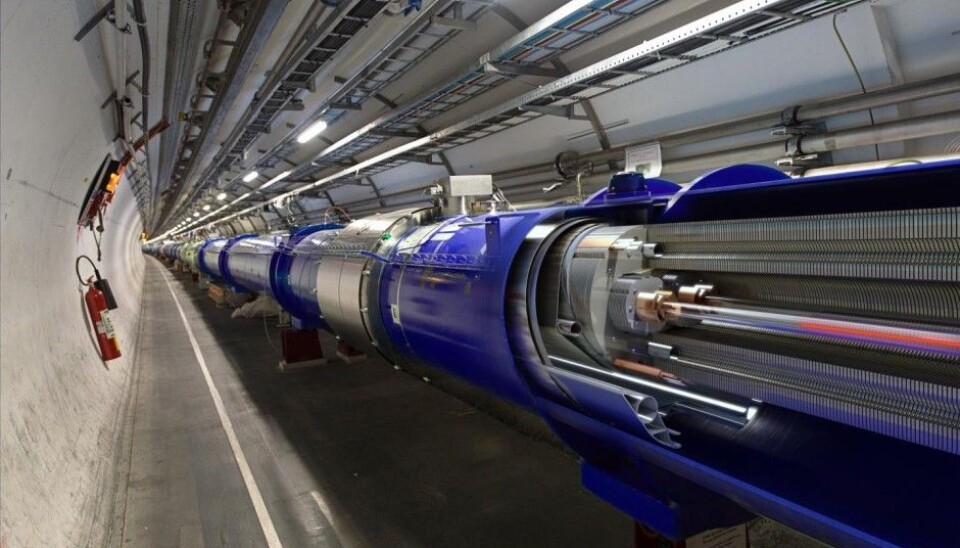

Two of the greatest mysteries in physics today are the nature of dark matter and the behavior of the gravitational force at the subatomic level. Scientists at CERN are hard at work on these mysteries with the ATLAS detector, a kind of giant digital camera at the Large Hadron Collider (LHC). But these high-tech instruments are just the beginning.

This article is more than ten years old and may contain outdated information.

At CERN, scientists use the LHC to accelerate tiny particles to nearly the speed of light and smash them together, recreating the conditions of the very early universe.

The goal is to discover new phenomena that theories beyond the Standard Model of particle physics, motivated by observations at microscopic and larger scales, predict should exist, but that have not yet been observed under controlled conditions.

The need for statistical significance

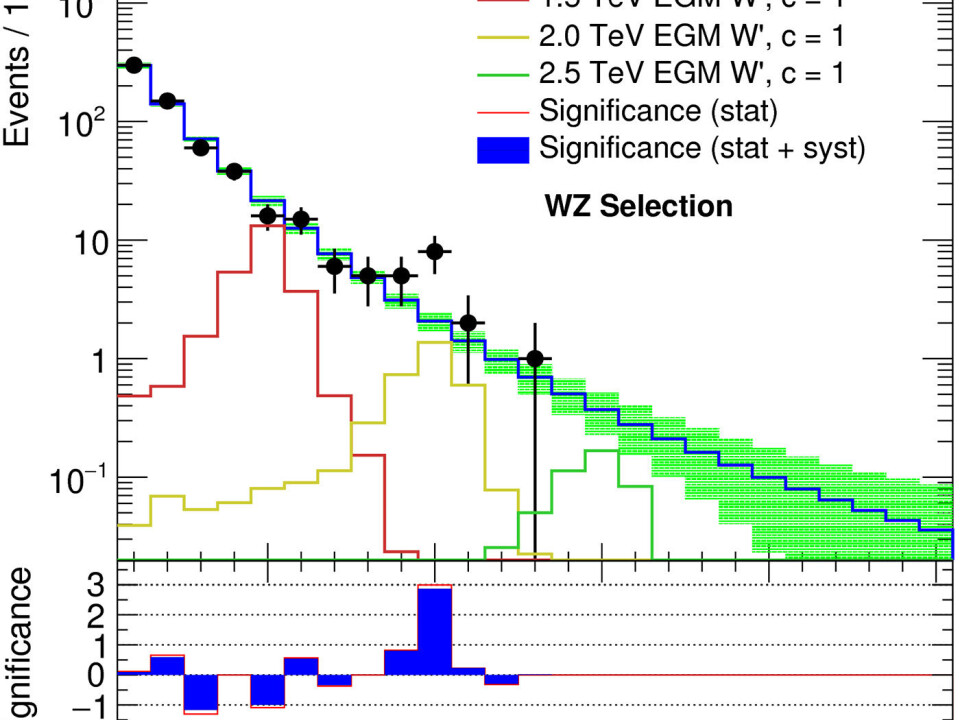

Several rare but important elementary particles have been found, such as the Higgs particle in 2012. Nevertheless, finding new elementary particles is like searching for a needle in a haystack.

"The phenomena we are looking for are extremely rare, and it is possible to be fooled by already known background processes that we haven't fully understood, and which could show up as a signal in the data itself", says Farid Ould-Saada, professor of experimental particle physics at the Department of Physics at the University of Oslo.

Professor Ould-Saada is project leader for the Norwegian High Energy Particle Physics Project, chair of the NorduGrid collaboration, and a researcher on the ATLAS experiment at CERN.

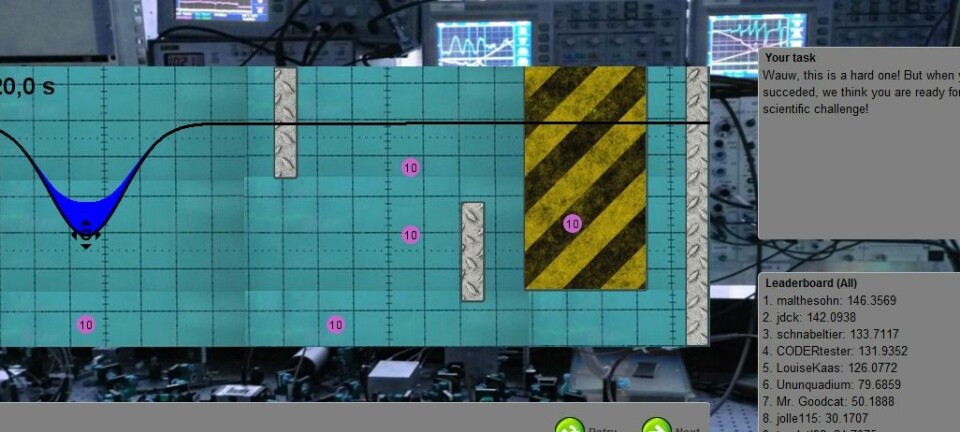

To find new elementary particles, physicists usually look for a "bump" in the data that is statistically significant and not caused by known particles.

But to reach this statistical significance and spot the subatomic needle in the haystack, the collisions must be repeated so many times that a bump caused by a random statistical fluctuation becomes highly improbable.

This, in turn, creates an enormous amount of data.
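To make the point about repetition concrete, here is a minimal sketch of a simple counting experiment in Python. It assumes a hypothetical analysis that expects s signal events on top of b background events in the region where a bump is sought, and it uses the standard "Asimov" approximation for discovery significance, which reduces to the familiar s/√b when the signal is small compared with the background. This is a textbook simplification, not the method ATLAS analyses actually use; the function names and the numbers are illustrative only. The 5-sigma target is the conventional discovery threshold in particle physics, which the article itself does not state.

```python
import math


def asimov_significance(s: float, b: float) -> float:
    """Approximate discovery significance Z (in standard deviations) for a
    hypothetical counting experiment expecting s signal events on top of
    b background events. Reduces to s / sqrt(b) when s is much smaller than b."""
    if s < 0 or b <= 0:
        raise ValueError("need s >= 0 and b > 0")
    q = 2.0 * ((s + b) * math.log1p(s / b) - s)
    # Guard against tiny negative values caused by floating-point rounding.
    return math.sqrt(max(q, 0.0))


def data_factor_needed(s: float, b: float, target_z: float = 5.0) -> float:
    """Factor by which the number of collisions must grow for the significance
    to reach target_z. Scaling s and b by k scales Z by sqrt(k), so
    k = (target_z / Z) ** 2."""
    z = asimov_significance(s, b)
    if z == 0.0:
        raise ValueError("no expected signal, so no amount of data is enough")
    return (target_z / z) ** 2


# Illustrative numbers only: 20 signal events expected over 100 background events.
z = asimov_significance(20, 100)
k = data_factor_needed(20, 100)
print(f"Z = {z:.2f} sigma; about {k:.2f}x more collisions needed for 5 sigma")
# prints: Z = 1.94 sigma; about 6.65x more collisions needed for 5 sigma
```

Because both s and b grow in proportion to the number of collisions, the significance grows only with the square root of the amount of data: doubling the significance takes four times as many collisions. This is one reason the data volumes grow so quickly.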

A network of supercomputers

So much data, in fact, that when CERN estimated how much money it would take to build a large enough computing center on site, it concluded that it would be cheaper and easier to distribute the data to physics institutes and supercomputing centers around the world.

These sites make up the Worldwide LHC Computing Grid (WLCG), which includes more than 140 datacenters in 33 countries.

The datacenters are linked by reliable high-speed network connections and by specialized software that lets the grid quickly move data from where it is generated to long-term storage, and on to the computing resources that transform the raw data into tables and plots for the physicists.

In the Nordic countries, this task is handled by six academic supercomputing sites in Bergen, Copenhagen, Espoo, Linköping, Oslo, and Umeå. The work is coordinated by NeIC, a NordForsk activity, which provides storage and computing in ways that suit the international collaboration.

"Building on a long tradition of Nordic collaboration, going back to the NorduGrid collaboration from 2001, we pool resources from many sites to provide the large-scale services the LHC experiments need", says Mattias Wadenstein, head of the LHC activities at NeIC.

Limiting factors for unlimited research

This supercomputing infrastructure allows physicists to record data from the LHC detectors at CERN, distribute the information, and make it accessible to researchers all over the world.

"The accelerators and detectors are like 'time-traveling microscopes' that take pictures of particle collisions under conditions that prevailed just after the Big Bang. Without the datacenters, it would be like having an extremely advanced camera but not being able to store or look at any of its pictures", says Professor Ould-Saada.

Which part of the research chain is currently the limiting factor for progress in particle physics?

"We always strive to push our knowledge, instruments and equipment to their limits, to reach higher energies in the accelerators and higher levels of detection. This means even more data, which of course requires greater data processing capabilities and adapted software techniques", Ould-Saada says.

Thus, the limiting factor can be found in every part of the chain, from the accelerator's power output all the way to the processing power and the speed and amount of storage available in the computing infrastructure. This, in turn, drives research to improve every part of the chain.

"The equipment and instruments we have today are never enough to find out what we want to know tomorrow, and that is part of the game", Professor Ould-Saada admits.

The mysteries of time and space

One of the biggest mysteries in science today is dark matter. Astronomical observations tell us that about 85 percent of the matter in the universe is dark matter. We can't see dark matter, but we can detect its gravitational effects.

But since dark matter only rarely interacts with ordinary matter, we don't know what dark matter is. Is it a type of elementary particle we just haven't discovered yet?

"From astronomical measurements we know dark matter exists, and we know that we don't know what it is. This is actually a big plus because now we know something is missing and want to find it", Ould-Saada says.

Physicists at CERN therefore try to produce dark matter particles at the LHC in order to study the nature of this mysterious phenomenon.

Professor Ould-Saada has no estimate of when, or whether, the mystery will be solved. A dark matter particle, if it exists, is not produced easily, and its production may be an extremely rare event. This was the case with the Higgs particle: the detectors had to be improved before it could be discovered.

And because dark matter interacts only very weakly with normal matter, such events are rare and difficult to detect. Like the aurora borealis in southern Norway, they may happen, but only occasionally.

"Thus, we need many observations before we may finally see dark matter, and to discover such new and rare phenomena we accumulate as many collisions as possible until a significant 'bump' appears in the data", says Professor Ould-Saada.

Is it worth it?

Another great mystery is the nature of gravity at the particle level. Gravity is much weaker than the other fundamental forces, and the exchange particle that would transmit this force has not yet been discovered.

To study gravity at the subatomic scale, physicists would need it to be much stronger than it normally appears. They are therefore looking for extra dimensions, beyond the three spatial dimensions we usually experience, in which gravity may be stronger. Some theories beyond the Standard Model, such as string theory and M-theory, operate with as many as 10 or 11 dimensions.

But is fundamental research in physics worth the money spent on it?

"My answer is that the research, and everything that may come out of it, is really worth it. If at some point in history we had decided to stop doing fundamental research, we would today have, for example, no electricity, no phones and no internet. Our lives are based on fundamental research. We must therefore push the questions as far as we can", Professor Ould-Saada concludes.